AI-powered transcription tool fabricates phrases that were never spoken

An AI-driven transcription tool is inserting phrases into transcripts that no one ever said.

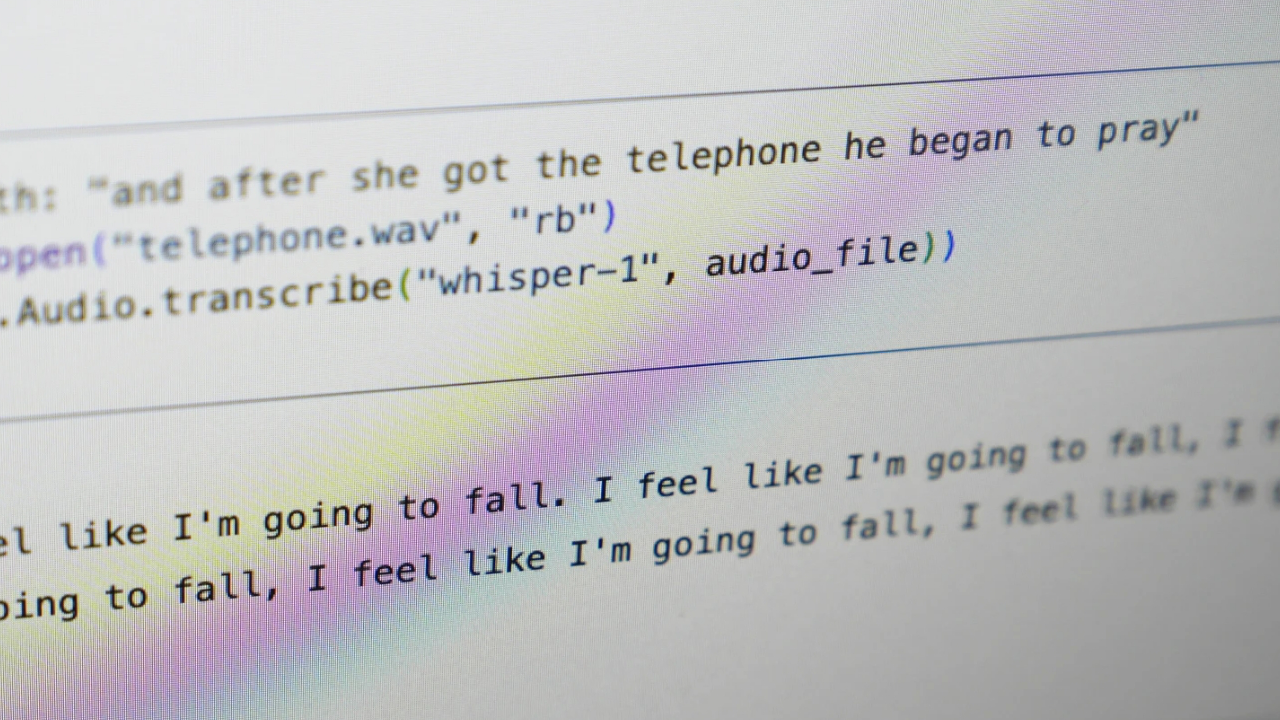

Whisper, OpenAI's AI-powered transcription tool, has a significant flaw: it frequently invents chunks of text or even entire sentences, according to interviews with more than a dozen software engineers, developers, and academic researchers. These experts said that some of the fabricated content, known in the industry as hallucinations, can include racial commentary, violent rhetoric, and even invented medical treatments.

Experts expressed concern over these fabrications, especially since Whisper is increasingly being adopted across various industries globally to transcribe and translate interviews, generate text in popular consumer applications, and create video subtitles.

An additional worry is the rapid adoption of Whisper-based tools by medical facilities to document patient consultations, despite OpenAI's warnings that the tool should not be used in "high-risk domains."

While the full scope of the issue is challenging to gauge, both researchers and engineers reported frequently encountering Whisper's hallucinations in their projects. For instance, a researcher from the University of Michigan, studying public meetings, discovered hallucinations in eight out of ten audio transcriptions he reviewed before he began working on model improvements.

A machine learning engineer said he initially found hallucinations in about half of the more than 100 hours of Whisper transcriptions he analyzed. A third developer found hallucinations in nearly all of the 26,000 transcripts he generated using Whisper.

The problem persists even with well-recorded, short audio samples. A recent study by computer scientists found 187 hallucinations in more than 13,000 clear audio snippets they examined.

At that rate, millions of recordings could yield tens of thousands of faulty transcriptions, researchers warned.

Anna Muller contributed to this report for TROIB News
